Improving In-Person Assessments

Jul 10, 2019 | Dave Boldt | Analytics, Assessment, Big Data, Maritime Training

Photo above taken by rawpixel.com on Pexels.com

Introduction

In-person assessments are a vital measure of individual and team performance in any safety-critical operation. In the maritime context this covers a very large number of tasks. When assessments are done well, they can be of immense value to both the assessed individual and the company. For the individual, they highlight areas where remedial training may be needed or where the individual excels. For the company, they show where training or procedures fall short or do not exist.

Doing in-person assessments well, however, is exceptionally difficult, especially with the way the maritime industry traditionally conducts them. Unless an assessment is truly objective and the information it yields is collected in a meaningful way, it has very limited worth. The power of well-executed assessments lies not only in checking an individual's or a team's ability to perform a task, but in understanding that performance across a broad range of people and using that understanding to improve training, policy, hiring practices and more. Doing so helps to improve the overall safety performance of a company in a provable and repeatable way. After all, anecdotal evidence is less than ideal when making important decisions on training and policy.

The Current State

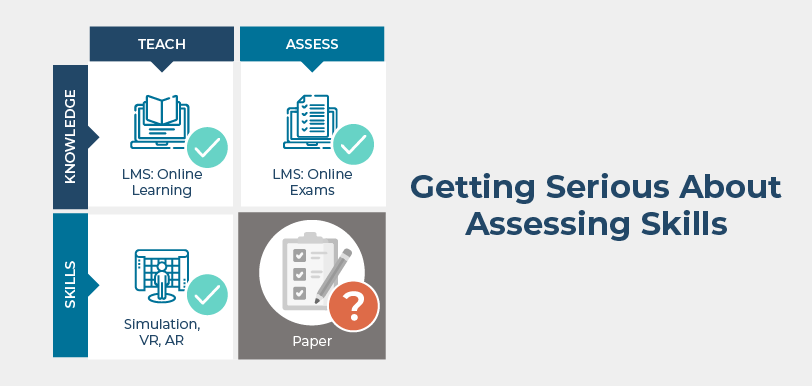

The current issues with assessments are the format of the assessments themselves and the techniques used to perform them. The problem comes down to two critical points: objectivity and data collection.

In-person assessments are not usually designed to guard against subjectivity. Most assessment formats follow relatively familiar processes. Small tasks are bundled into categories, and an assessor must very quickly decide whether the individual or team has added to, detracted from, or been neutral to the process (a ‘1-2-3’ rating scale). An alternative, even more difficult, assessment format has an assessor quickly grading on a ‘1 to 5’ scale (Excellent – Exceeding expectations – Meeting expectations – Needs improvement – Unsatisfactory). These commonplace formats are highly conducive to assessor variation and subjectivity.

Assessor training and rubrics can help reduce subjectivity, as can using a single assessor for all performance assessments. However, training can only do so much, rubrics take more time to use (difficult in fast-paced assessments), and keeping a single assessor is often not logistically possible, especially for a larger crew. Even with a single assessor using the same grading form for an entire group, there can be too much variance with this format to make the data gathered truly reliable.

Moving on to data collection, assessments today remain almost exclusively an exercise in paper checklists. Along with the hassle of managing paperwork (printing, collecting, filing, etc.), paper checklists make it difficult to aggregate data with other assessments. The lack of aggregation means that results are often not compared in depth or correlated with actual crew performance during operations.

Ways to Improve

With all that said, improving in-person assessments and making them more widely usable requires increased objectivity and a way to capture all the data efficiently. The former means reducing subjective judgement as much as possible, and one way to do so is to use a binary (yes/no) grading scheme for assessment questions. The latter means moving away from paper checklists and using software to assist assessors and to parse the data.

While using software to reduce paperwork is an obvious solution to most, the binary grading scheme may raise some eyebrows. On the face of it, limiting the answers to two choices (“Yes/No”) may seem to lessen the scope of an assessment and decrease granularity. However, if you break down a skill into specific observable behaviours, use groupings of skills for broader knowledge sets, add weighting to each behaviour, and combine them all with the right algorithm, then you can unlock the same level of detail as in the more traditional formats (e.g. a rating scale of 1 to 5). The binary grading scheme removes assessor variation and ambiguity, as the assessor can only say whether they saw a behaviour or not. It helps speed up the assessment process itself, as assessors do not need time to ‘grade’ and rate an action. It also gives the organization a very fine-grained and deep understanding of individual and team performance, as you can drill down to specific actions and whether or not they occurred when reviewing assessments.
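As a rough illustration of how yes/no marks can roll up into graded scores, here is a minimal Python sketch. It is not the algorithm of any particular product: the `Behaviour` class, the `skill_scores` function, the checklist items and the weights are all hypothetical, and the combination rule (a weighted share of observed behaviours per skill) is only one simple choice among many.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Behaviour:
    skill: str        # skill grouping the behaviour belongs to
    description: str  # what the assessor watches for
    weight: float     # relative importance within the skill (made-up values)


def skill_scores(behaviours, observed):
    """Return {skill: weighted share of behaviours marked 'yes'}, each from 0 to 1."""
    earned, possible = {}, {}
    for b in behaviours:
        possible[b.skill] = possible.get(b.skill, 0.0) + b.weight
        if observed.get(b.description, False):  # unmarked behaviours count as 'no'
            earned[b.skill] = earned.get(b.skill, 0.0) + b.weight
    return {skill: earned.get(skill, 0.0) / total for skill, total in possible.items()}


# Hypothetical checklist: two skills, each broken into observable behaviours.
CHECKLIST = [
    Behaviour("Lookout", "Scans horizon before altering course", 2.0),
    Behaviour("Lookout", "Cross-checks radar against visual bearing", 1.0),
    Behaviour("Lookout", "Reports new contacts to the officer of the watch", 1.0),
    Behaviour("Communication", "Repeats helm orders back", 1.0),
    Behaviour("Communication", "Confirms handover items verbally", 1.0),
]

# The assessor's yes/no marks for one assessment.
marks = {
    "Scans horizon before altering course": True,
    "Cross-checks radar against visual bearing": False,
    "Reports new contacts to the officer of the watch": True,
    "Repeats helm orders back": True,
    "Confirms handover items verbally": False,
}

scores = skill_scores(CHECKLIST, marks)
print(scores)
```

With these made-up marks, the Lookout skill scores 0.75 and Communication scores 0.5. The assessor only ever recorded yes or no, yet the result is a graded score per skill, and the individual marks remain available for drill-down.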

It is easy to see how technology can help: from reducing paperwork and allowing assessors to efficiently mark the observed behaviour, to parsing large amounts of data for analytics. Imagine a large maritime operator using this across many job titles, tasks and functions. Over time, using software to help collect the data will produce a minimum safety baseline and can help highlight issues that fall outside of that baseline. A simple chart or graph view of the data can show the operator where training resources need to be focused and, just as importantly, where they do not. Do top performers need to fly halfway around the world for expensive simulation days when you can objectively see that they are performing to standard during on-the-job assessments, as they have been trained? Whether it is training, policy and guidance, company culture, or personnel, any issue can be highlighted and acted on with the right software, in a way that no other form of assessment can deliver.
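To make the idea of a baseline concrete, the following Python sketch flags anyone whose score falls well below the group average. It assumes each person already has a score between 0 and 1 for a given task. The function name `flag_below_baseline`, the crew labels and the rule itself (more than `k` standard deviations below the mean) are illustrative assumptions, not an established industry threshold.

```python
from statistics import mean, pstdev


def flag_below_baseline(scores, k=1.0):
    """Return (baseline, names scoring more than k standard deviations below it)."""
    values = list(scores.values())
    baseline = mean(values)           # group average, used here as the baseline
    threshold = baseline - k * pstdev(values)
    flagged = sorted(name for name, s in scores.items() if s < threshold)
    return baseline, flagged


# Hypothetical scores for one task across a small crew.
crew_scores = {
    "crew_a": 0.75,
    "crew_b": 0.75,
    "crew_c": 1.0,
    "crew_d": 0.25,
    "crew_e": 0.75,
}

baseline, needs_attention = flag_below_baseline(crew_scores)
print(baseline, needs_attention)
```

In this made-up data only `crew_d` is flagged, which points training resources at one person rather than the whole crew. A real deployment would need far more data, and a threshold agreed with the operator's safety management.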

Conclusion

It is time for assessment to “come of age” in the maritime industry. Abandoning paper-checklist-style assessments (and their associated ambiguity) and adopting modern technology built for the purpose will ensure that all of the “practice” performed at sea becomes truly valuable training. Proper tracking of this data will bring safety and real performance to light, and enable year-over-year improvements based on actual performance, not anecdotal evidence.
